The increasing presence of robots in society calls for a deeper understanding of the attitudes humans have toward them. People may treat robots as mechanical artifacts, or they may consider them intentional agents. Accordingly, they may explain a robot's behavior as stemming from the operations of a mind (intentional interpretation) or as the result of its mechanical design (mechanical interpretation).

A quick look at market research on the robotics industry shows that robots, including industrial, commercial, social, and humanoid robots, are here to stay. The sooner humans accept this fact and adapt to living and working alongside humanoid robots, the better.

According to Industry Research Biz, the global commercial robotics market is projected to reach $24,760 million by 2026, up from $8,310.7 million in 2020, at a compound annual growth rate (CAGR) of 20.0 percent during 2021-2026.
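The three figures in this forecast can be checked against each other. The minimal sketch below assumes that growth compounds once a year over the six years from the 2020 base to 2026. The function names `cagr` and `project` are illustrative and do not come from the report.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value, rate, years):
    """Value reached by compounding start_value at `rate` per year for `years` years."""
    return start_value * (1.0 + rate) ** years


# Figures quoted above, in millions of US dollars.
base_2020 = 8310.7
target_2026 = 24760.0
years = 2026 - 2020  # assumption: six annual compounding periods

implied_rate = cagr(base_2020, target_2026, years)
projected_2026 = project(base_2020, 0.20, years)
print(f"Implied CAGR: {implied_rate:.1%}")
print(f"2026 value at exactly 20%: ${projected_2026:,.0f} million")
```

The implied rate comes out just under 20 percent. Compounding at exactly 20 percent gives a 2026 value within about a quarter of a percent of the quoted $24,760 million, so the quoted numbers are consistent with each other under this assumption.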

According to a Mordor Intelligence report, Social Robots Market: Growth, Trends, and Forecasts (2020-2025), the social robots market is expected to grow at a CAGR of about 14 percent over the 2020-2025 forecast period. Advances in Artificial Intelligence (AI) and Natural Language Processing (NLP) research, together with development platforms such as the Robot Operating System (ROS), have enabled the rise of a class of robots known as social robots.

Social robots rely on AI to process the information they receive through cameras (usually the eyes) and other sensors. Advances in AI enable robot designers to translate neuroscientific and psychological insights into algorithms. These algorithms allow robots to recognize faces, emotions, and voices, interpret speech, and respond appropriately to people's needs. This has led to a rise in the use of social robots across various industries.

In 2019, the World Economic Forum (WEF) reported on emerging technologies, saying that “the field of social robotics has reached a tipping point wherein the robots have greater interactive capabilities and can perform useful tasks than ever.”

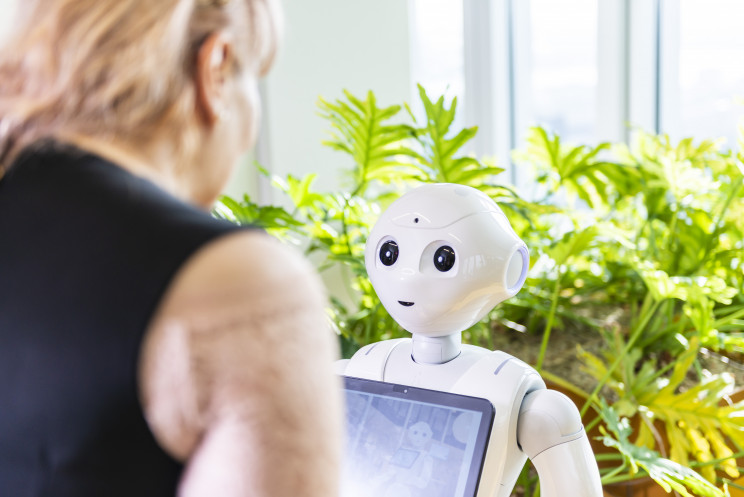

One of the best-known social robots is Pepper, developed by SoftBank Robotics. More than 15,000 Peppers are deployed worldwide. They perform services such as hotel check-ins, shopping assistance, airport customer care, and customer service at city offices. One example is the Pepper pictured above, deployed by the City of Rotterdam in the Netherlands.

Social robots are also deployed in the retail sector to guide customers around stores. Peppers can be found in the hospitality industry, banks, shopping malls, family entertainment centers, and other customer-oriented sectors, with banking and retail leading adoption.

Social robots have also found a place in education, where they help students overcome a lack of interest in certain subjects and support teachers by motivating students. Now more than ever, the chances that you will find a robot in the room are high, and they are rising.

There is a robot in the room: The dynamics between humans and humanoid robots (Androids and Gynoids)

Just as humans differ from one another, there is also variety in the world of robots. A humanoid robot is a robot that has the appearance or characteristics of a human being, whether a woman, a man, or a child.

There are also different types of humanoid robots. Androids are humanoid robots designed and built to resemble human males, whereas gynoids are built to resemble human females.

Accordingly, a person's attitude toward the robot in the room is likely to differ depending on whether it is a robot that vacuums the floor or a humanoid robot (an android or a gynoid) that serves in a restaurant or household.

The goal of humanoid robotics is to create machines that behave as if they were human. The ultimate goal is to engineer humanoid robots with human-like intelligence, reaching the next level among the types of Artificial Intelligence: Artificial General Intelligence (AGI). Such humanoids, about as capable as humans, are expected to live, work, and coexist with human beings. This will trigger a wide range of individual reactions and attitudes toward the humanoid in the room.

This raises a question: can we accurately learn about people's general attitudes toward robots, including social acceptance and social acceptability? Researchers found a possible answer in brain wave signals.

Brain wave signals reveal human attitudes toward the robot in the room

Scientific research on robotics and Artificial Intelligence has been among the most exciting fields of recent decades. More recently, the urgent need to address topics such as human-robot interaction has prompted cross-university collaborations. Here, we look at what brain wave signals reveal about human attitudes toward encounters with humanoid robots.

A new study of electrical activity in the brains of 52 people shows that it might be possible to measure and predict people's attitudes toward a nearby robot even before they observe its behavior or interact with it. Brain wave measurements taken during what the researchers call the resting state differed between people who later perceived the robot's actions as intentional and those who believed the robot acted according to its programming.

“Arguably, a crucial prerequisite for individual and social trust in [robots and automated vehicles] is that people can reliably interpret and anticipate the behavior of autonomous technologies to safely interact with them,” writes Tom Ziemke in a related Focus article. Researchers generally use questionnaires to evaluate how people interpret robotic behavior, but these survey methods lack neurological detail and can introduce biases.

The study, “The Human Brain Reveals Resting State Activity Patterns That are Predictive of Biases in Attitudes toward Robots,” was a collaboration between Francesco Bossi, Cesco Willemse, Jacopo Cavazza, Serena Marchesi, Vittorio Murino, and Agnieszka Wykowska at the Istituto Italiano di Tecnologia in Genoa, Italy. Francesco Bossi is also affiliated with the IMT School for Advanced Studies Lucca in Lucca, Italy. Vittorio Murino is also affiliated with the University of Verona in Verona, Italy, and with Huawei Technologies Ltd. in Dublin, Ireland. Agnieszka Wykowska is also affiliated with the Luleå University of Technology in Luleå, Sweden.

The researchers examined whether individual attitudes toward robots can be differentiated on the basis of default neural activity patterns during the resting state, measured with electroencephalography (EEG).

Participants were presented with scenarios depicting a humanoid robot performing various actions in everyday contexts. Before introducing participants to this task, the researchers measured their resting-state EEG activity.

According to the scientists' report, resting-state EEG beta activity distinguished people who were later inclined to interpret robot behaviors as mechanical from those inclined to interpret them as intentional. “This pattern is similar to the pattern of activity in the default mode network, which was previously demonstrated to have a social role.”

In addition, gamma activity observed while participants were making decisions about a robot's behavior indicates a relationship between theory of mind and these attitudes. The researchers therefore provided evidence that individual biases toward treating robots as either intentional agents or mechanical artifacts can be detected at the neural level, already in the resting-state EEG signal.

The paper was published on September 30, 2020, in Science Robotics, a journal of the American Association for the Advancement of Science (AAAS), a nonprofit science society.

Francesco Bossi and his colleagues found that a person’s electroencephalogram (EEG) activity in the presence of a humanoid robot predicted how the person would later explain the robot’s actions.

Participants who later perceived the robot’s behavior as subject to its programming and without intention showed higher beta wave activity in certain brain regions, and lower gamma activity in other regions during resting state observations.
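In EEG research, "beta activity" and "gamma activity" usually refer to the power of the signal within a frequency band. Beta is conventionally taken as roughly 13-30 Hz and gamma as above 30 Hz. The sketch below estimates band power for a single channel from a plain FFT periodogram. It is an illustration only and is not the study's analysis pipeline, which this article does not describe. The band edges, the sampling rate, and the helper names `band_power` and `band_powers` are assumptions.

```python
import numpy as np

# Assumed conventional band edges in Hz; the study's exact definitions may differ.
BANDS = {"beta": (13.0, 30.0), "gamma": (30.0, 45.0)}


def band_power(signal, fs, low, high):
    """Estimate the power of one EEG channel between `low` (inclusive) and
    `high` (exclusive) Hz from a one-sided FFT periodogram."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                                # remove the DC offset
    n = x.size
    psd = np.abs(np.fft.rfft(x)) ** 2 / (fs * n)    # two-sided density at each bin
    # Fold negative frequencies into the one-sided spectrum
    # (DC is never doubled; for even n, neither is the Nyquist bin).
    if n % 2 == 0:
        psd[1:-1] *= 2
    else:
        psd[1:] *= 2
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    df = fs / n                                     # frequency resolution in Hz
    mask = (freqs >= low) & (freqs < high)
    return float(psd[mask].sum() * df)


def band_powers(signal, fs):
    """Return the power in each band of BANDS for one channel."""
    return {name: band_power(signal, fs, lo, hi) for name, (lo, hi) in BANDS.items()}


# Usage: a synthetic 2-second "recording" at 250 Hz with a strong 20 Hz (beta)
# component and a weaker 40 Hz (gamma) component.
fs = 250
t = np.arange(2 * fs) / fs
signal = 2.0 * np.sin(2 * np.pi * 20 * t) + 0.5 * np.sin(2 * np.pi * 40 * t)
powers = band_powers(signal, fs)
print(powers)  # beta ≈ 2.0 (= 2.0**2 / 2), gamma ≈ 0.125 (= 0.5**2 / 2)
```

A real analysis would typically average over many epochs, for example with Welch's method. It would then compare band powers across channels and participant groups. The single-window periodogram here only shows what the band labels measure.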

These differences were measured relative to participants who were more likely to attribute intentionality to the robot's actions. The researchers note that increased beta activity suggests a greater tendency, at rest, to try to make sense of oneself and others.

By contrast, according to the researchers, higher gamma activity indicates a mental state in which one ascribes one's own thoughts, feelings, desires, and emotions to others. This leads some participants to perceive the robot's actions as intentional. In the future, the results of Francesco Bossi and his colleagues could help robot designers develop more socially acceptable robots, says Tom Ziemke.

Human + humanoid interaction

The researchers concluded that it is indeed possible to predict the attitudes humans hold toward artificial agents, specifically humanoid robots, from EEG data recorded in the baseline default mode of the resting state.

According to the paper, this sheds light on how a given individual might approach the humanoid robots that increasingly occupy social environments around the world. Decoding such a high-level cognitive phenomenon from neural activity is a notable result. It can also be highly informative about the mechanisms underlying the attitudes people adopt.

The intentional/mechanistic bias in attitudes toward robots might rely on a mechanism similar to that of other biases, such as racial and gender biases. The researchers anticipate that future studies might examine whether the neural correlates of biases in attitudes toward robots generalize to other types of biases.

“The present study, however, does not address the issue of whether the observed differential effect across participants is related to a particular context in which they observe the robot, particular robot appearance, or a general attitude that a given individual has toward robots.”

Future research should examine whether the neural correlates of the biases and attitudes observed in this study are signatures of a general individual trait or are instead tied to a given state or context. In either case, the research shows that detectable neural characteristics underlie the likelihood of treating robots as intentional agents or as mechanistic artifacts.
